A vast amount of information exists across the countless webpages online. Much of this information is “unstructured” text that may be useful in our analyses. This section covers the basics of scraping such text from online sources. Throughout this section I will illustrate how to extract different text components of webpages by dissecting the Wikipedia page on web scraping. However, it’s important to first cover one of the basic components of HTML, the element, as we will leverage this information to pull the desired text. I offer only enough insight to begin scraping; I highly recommend XML and Web Technologies for Data Sciences with R and Automated Data Collection with R to learn more about HTML and XML element structures.

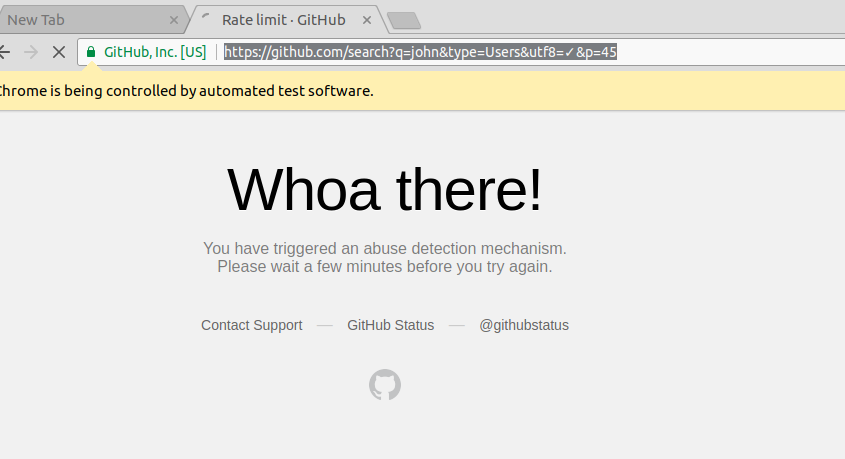


HTML elements are written with a start tag, an end tag, and with the content in between: <tagname>content</tagname>. The tags which typically contain the textual content we wish to scrape, and the tags we will leverage in the next two sections, include:


- <h1>, <h2>, …, <h6>: Largest heading, second largest heading, etc.
- <p>: Paragraph elements
- <ul>: Unordered bulleted list
- <ol>: Ordered list
- <li>: Individual list item
- <div>: Division or section
- <table>: Table

For example, text in paragraph form that you see online is wrapped in the HTML paragraph tag <p>, as in <p>This is a typical paragraph of text.</p>.

It is through these tags that we can start to extract textual components (also referred to as nodes) of HTML webpages.

Scraping HTML Nodes

To scrape online text we’ll make use of the relatively new rvest package. rvest was created by the RStudio team, inspired by libraries such as Beautiful Soup, and has greatly simplified web scraping in R. rvest provides multiple functionalities; however, in this section we will focus only on extracting HTML text with rvest. It’s important to note that rvest makes use of the pipe operator (%>%) developed through the magrittr package. If you are not familiar with the functionality of %>% I recommend you jump to the section on Simplifying Your Code with %>% so that you have a better understanding of what’s going on with the code.

To extract text from a webpage of interest, we specify what HTML elements we want to select by using html_nodes(). For instance, if we want to scrape the primary heading for the Web Scraping Wikipedia webpage we simply identify the <h1> node as the node we want to select. html_nodes() will identify all <h1> nodes on the webpage and return the HTML element. In our example we see there is only one <h1> node on this webpage.

To extract only the heading text for this <h1> node, and not include all the HTML syntax we use html_text() which returns the heading text we see at the top of the Web Scraping Wikipedia page.

If we want to identify all the second level headings on the webpage we follow the same process but instead select the <h2> nodes. In this example we see there are 10 second level headings on the Web Scraping Wikipedia page.
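The heading steps above can be sketched as follows. To stay runnable offline, this sketch parses a small inline HTML string, which is an assumption standing in for the Wikipedia page; the object name scraping_wiki and the heading texts are illustrative only.

```r
library(rvest)

# Hypothetical stand-in for the Wikipedia page. For the live page, use
# read_html("https://en.wikipedia.org/wiki/Web_scraping") instead.
scraping_wiki <- read_html(
  "<html><body>
     <h1 id='firstHeading'>Web scraping</h1>
     <h2>Techniques</h2>
     <h2>Legal issues</h2>
   </body></html>"
)

# Select every <h1> node; the result still carries the HTML markup.
h1_nodes <- scraping_wiki %>% html_nodes("h1")

# Strip the markup and keep only the heading text.
h1_text <- h1_nodes %>% html_text()

# The same process applied to the second-level headings.
h2_text <- scraping_wiki %>% html_nodes("h2") %>% html_text()
```

On the live page the counts and heading texts will differ from this toy document, and they may change as the article is edited.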

Next, we can move on to extracting much of the text on this webpage, which is in paragraph form. We can follow the same process illustrated above but instead we’ll select all <p> nodes. This selects the 17 paragraph elements from the web page, which we can examine by subsetting the list p_nodes to see the first line of each paragraph along with the HTML syntax. Just as before, to extract the text from these nodes and coerce it to a character vector we simply apply html_text().
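A minimal sketch of the paragraph extraction, again on a hypothetical inline fragment rather than the live page. The names p_nodes and p_text follow the text; the HTML content is made up for illustration.

```r
library(rvest)

# Hypothetical fragment: two paragraphs separated by a bulleted list.
page <- read_html(
  "<div>
     <p>Web scraping is data scraping used for extracting data.</p>
     <ul><li>Human copy-and-paste</li><li>Text pattern matching</li></ul>
     <p>Web pages are built using text-based mark-up languages.</p>
   </div>"
)

p_nodes <- page %>% html_nodes("p")  # nodes, markup included
p_text  <- p_nodes %>% html_text()   # character vector, one element per paragraph

length(p_text)  # number of paragraphs found
p_text[1]       # text of the first paragraph
```

Note that the list items between the two paragraphs do not appear in p_text, because they are not inside <p> nodes.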

Not too bad; however, we may not have captured all the text that we were hoping for. Since we extracted text for all <p> nodes, we collected all identified paragraph text; however, this does not capture the text in the bulleted lists. For example, when you look at the Web Scraping Wikipedia page you will notice a significant amount of text in bulleted list format following the third paragraph under the Techniques heading. If we look at our data we’ll see that the text in this list is not captured between the two paragraphs.

This is because the text in this list is contained in <ul> nodes. To capture the text in lists, we can use the same steps as above but select the specific nodes that represent HTML list components. We can approach extracting list text in two ways.

First, we can pull all list elements (<ul>). When scraping all <ul> text, the resulting data structure will be a character string vector with each element representing a single list consisting of all list items in that list. In our running example there are 21 list elements as shown in the example that follows. You can see the first list scraped is the table of contents and the second list scraped is the list in the Techniques section.

An alternative approach is to pull all <li> nodes. This will pull the text contained in each list item for all the lists. In our running example there are 146 list items that we can extract from this Wikipedia page. The first eight list items are the table of contents we see towards the top of the page. List items 9-17 are the items contained in the “Techniques” section, list items 18-44 are the items listed under the “Notable Tools” section, and so on.
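The difference between the two approaches can be sketched on a small hypothetical document with two lists. The names ul_text and li_text follow the text; the list contents are illustrative.

```r
library(rvest)

# Hypothetical page with two lists (a table of contents and a techniques list).
page <- read_html(
  "<body>
     <ul><li>Contents</li><li>Techniques</li></ul>
     <ul><li>Human copy-and-paste</li><li>HTML parsing</li><li>DOM parsing</li></ul>
   </body>"
)

# One string per list: all items of a list are concatenated together.
ul_text <- page %>% html_nodes("ul") %>% html_text()

# One string per list item, across every list on the page.
li_text <- page %>% html_nodes("li") %>% html_text()

length(ul_text)  # number of lists
length(li_text)  # number of individual list items
```

Selecting <li> nodes usually gives cleaner units for later text analysis, while selecting <ul> nodes keeps the grouping of items by list.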

At this point we may believe we have all the text desired and proceed with joining the paragraph (p_text) and list (ul_text or li_text) character strings and then performing the desired textual analysis. However, we may now have captured more text than we were hoping for. For example, by scraping all lists we are also capturing the listed links in the left margin of the webpage. If we look at list items 104-136 that we scraped, we’ll see that these texts correspond to the left margin text.

If we desire to scrape every piece of text on the webpage then this won’t be of concern. In fact, if we want to scrape all the text regardless of the content it represents, there is an easier approach. We can capture all the content, including text in paragraphs (<p>), lists (<ul>, <ol>, and <li>), and even data in tables (<table>), by using <div>. This is because these other elements are usually nested inside an HTML division or section, so pulling all <div> nodes will extract all text contained in that division or section regardless of whether it is also contained in a paragraph or list.
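As a rough sketch of the <div> approach, the hypothetical fragment below wraps a paragraph, a list, and a table in a single division; the id and contents are made up for illustration.

```r
library(rvest)

# Hypothetical division containing a paragraph, a list, and a table.
page <- read_html(
  "<body>
     <div id='content'>
       <p>Intro paragraph.</p>
       <ul><li>Item one</li><li>Item two</li></ul>
       <table><tr><td>Cell value</td></tr></table>
     </div>
   </body>"
)

# Selecting the <div> returns the text of everything nested inside it.
all_text <- page %>% html_nodes("div") %>% html_text()
```

When divisions are nested, each <div> is returned separately and the text of an inner division also appears in its parent, so the result can contain repeated text.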

Scraping Specific HTML Nodes

However, if we are concerned only with specific content on the webpage then we need to make our HTML node selection process a little more focused. To do this, we can use our browser’s developer tools to examine the webpage we are scraping and get more details on specific nodes of interest. If you are using Chrome or Firefox you can open the developer tools by pressing F12 (Cmd + Opt + I on a Mac); in Safari you would use Command-Option-I. An additional option, recommended by Hadley Wickham, is to use selectorgadget.com, a Chrome extension, to help identify the web page elements you need [1].

Once the developer tools are open, your primary concern is the element selector. This is located in the top left-hand corner of the developer tools window.

Once you’ve selected the element selector you can now scroll over the elements of the webpage which will cause each element you scroll over to be highlighted. Once you’ve identified the element you want to focus on, select it. This will cause the element to be identified in the developer tools window. For example, if I am only interested in the main body of the Web Scraping content on the Wikipedia page then I would select the element that highlights the entire center component of the webpage. This highlights the corresponding element <div> in the developer tools window as the following illustrates.

I can now use this information to select and scrape all the text from this specific <div> node by calling the ID name (“#mw-content-text”) in html_nodes() [2]. As you can see below, the text that is scraped begins with the first line in the main body of the Web Scraping content and ends with the text in the See Also section which is the last bit of text directly pertaining to Web Scraping on the webpage. Explicitly, we have pulled the specific text associated with the web content we desire.
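A minimal sketch of selecting by ID. The ID “#mw-content-text” comes from the text; the surrounding HTML, including the mw-panel sidebar, is a hypothetical stand-in for the real page.

```r
library(rvest)

# Hypothetical page: a sidebar division plus the main content division.
page <- read_html(
  "<body>
     <div id='mw-panel'><ul><li>Main page</li><li>Random article</li></ul></div>
     <div id='mw-content-text'>
       <p>Web scraping is data scraping used for extracting data from websites.</p>
       <h2>See also</h2>
     </div>
   </body>"
)

# A leading '#' selects the node whose id attribute matches.
body_text <- page %>% html_nodes("#mw-content-text") %>% html_text()
```

Only the text inside the selected division is returned; the sidebar links are left out.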

Using the developer tools approach allows us to be as specific as we desire. We can identify the class name for a specific HTML element and scrape the text for only that node rather than all the other elements with similar tags. This allows us to scrape the main body of content as we just illustrated, or we can identify specific headings, paragraphs, lists, and list components if we desire to scrape only these specific pieces of text.
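More focused selections can be sketched by combining an ID or class with a tag name in the CSS selector. The class names tools and summary below are hypothetical; on a real page you would read them from the developer tools.

```r
library(rvest)

# Hypothetical page; the class names are assumptions for illustration.
page <- read_html(
  "<body>
     <div id='mw-panel'><ul><li>Main page</li></ul></div>
     <div id='mw-content-text'>
       <h2>Notable tools</h2>
       <ul class='tools'><li>Apache Camel</li><li>Scrapy</li></ul>
       <p class='summary'>Short summary.</p>
     </div>
   </body>"
)

# Headings nested anywhere inside the main content division.
content_h2 <- page %>% html_nodes("#mw-content-text h2") %>% html_text()

# List items of one specific list, selected by class.
tool_items <- page %>% html_nodes("ul.tools li") %>% html_text()

# A single paragraph selected by class.
summary_p <- page %>% html_nodes("p.summary") %>% html_text()
```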

Cleaning up

With any web scraping activity, especially involving text, there is likely to be some clean-up involved. For example, in the previous example we saw that we can specifically pull the list of Notable Tools; however, between each list item, rather than a space, there are one or more \n characters, which mark a new line in the text. We can clean this up quickly with a little character string manipulation.

Similarly, as we saw in our example above with scraping the main body content (body_text), there are extra characters (i.e. \n, \, ^) in the text that we may not want. Using a little regex we can clean this up so that our character string consists of only text that we see on the screen and no additional HTML code embedded throughout the text.
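Both clean-up steps can be sketched with base R’s gsub(). The input strings below are made up to mimic scraped output, and the variable names are illustrative.

```r
# Hypothetical scraped list text with newlines between items.
raw_tools <- "Apache Camel\nArchive.is\n\nAutomation Anywhere"

# Replace each run of newlines with a single space.
clean_tools <- gsub("\n+", " ", raw_tools)

# Hypothetical scraped body text containing \n, a backslash, and ^.
raw_body <- "Web scraping\n\nis data scraping^ used for \\ extracting data."

# Replace runs of the unwanted characters with a space ...
clean_body <- gsub("[\n\\\\^]+", " ", raw_body, perl = TRUE)

# ... then collapse repeated whitespace and trim both ends.
clean_body <- trimws(gsub("\\s+", " ", clean_body))
```

Which characters count as unwanted depends on the page, so the character class in the pattern would need adjusting for other sources.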

So there we have it, text scraping in a nutshell. Although not all-encompassing, this section covered the basics of scraping text from HTML documents. Whether you want to scrape text from all common text-containing nodes such as <div>, <p>, <ul> and the like, or you want to scrape from a specific node using its ID, this section provides the fundamentals of using rvest to scrape the text you need. In the next section we move on to scraping data from HTML tables.

[1] You can learn more about selectors at flukeout.github.io

[2] You can simply read the ID name from the highlighted element, or you can right-click the highlighted element in the developer tools window and select Copy selector. You can then paste directly into html_nodes(), as it will paste the exact ID name that you need for that element.

This tutorial covers how to extract and process text data from web pages or other documents for later analysis. The automated download of HTML pages is called Crawling. The extraction of the textual data and/or metadata (for example, article date, headlines, author names, article text) from the HTML source code (or the DOM, the document object model, of the website) is called Scraping. For these tasks, we use the package “rvest”.

1. Download a single web page and extract its content

2. Extract links from an overview page

3. Extract all articles for the corresponding links from step 2

Create a new R script (File -> New File -> R Script) named “Tutorial_1.R”. In this script you will enter and execute all commands. If you want to run the complete script in RStudio, you can use Ctrl-A to select the complete source code and execute with Ctrl-Return. If you want to execute only one line, you can simply press Ctrl-Return on the respective line. If you want to execute a block of several lines, select the block and press Ctrl-Return.

Tip: Copy individual sections of the source code directly into the console (2) and run it step by step. Get familiar with the function calls included in the Help function.

First, make sure your working directory is the data directory we provided for the exercises.

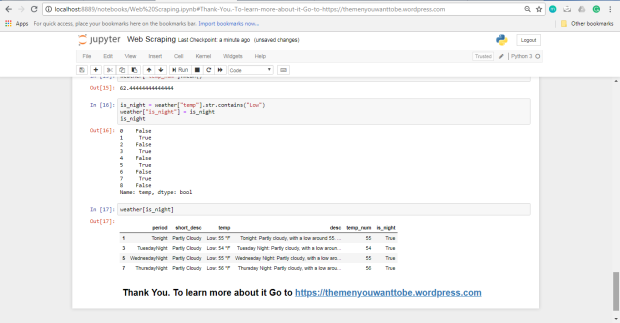

Modern websites often do not contain the full content displayed in the browser in the source files served by the webserver. Instead, the browser loads additional content dynamically via JavaScript code contained in the original source file. To be able to scrape such content, we rely on the headless browser PhantomJS, which renders the site for a given URL before we start the actual scraping, i.e. the extraction of certain identifiable elements from the rendered site.

If not done yet, please install the webdriver package for R and install the phantomJS headless browser. This needs to be done only once.

Now we can start an instance of PhantomJS and create a new browser session that awaits to load URLs to render the corresponding websites.
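The setup and session start might look like the sketch below. It assumes the webdriver package with its install_phantomjs(), run_phantomjs(), and Session$new() functions; the helper name start_phantom_session is hypothetical and not part of the tutorial.

```r
# One-time setup, run manually if not done yet:
#   install.packages("webdriver")
#   webdriver::install_phantomjs()

# Hypothetical helper: starts a PhantomJS process and opens a browser
# session that can load URLs and render the corresponding pages.
start_phantom_session <- function() {
  pjs_instance <- webdriver::run_phantomjs()
  pjs_session  <- webdriver::Session$new(port = pjs_instance$port)
  # Return the instance alongside the session so the process stays referenced.
  list(instance = pjs_instance, session = pjs_session)
}
```

Calling start_phantom_session() requires PhantomJS to be installed, so the function is only defined here and not executed.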

In a first exercise, we will download a single web page from “The Guardian” and extract text together with relevant metadata such as the article date. Let’s define the URL of the article of interest and load the rvest package, which provides very useful functions for web crawling and scraping.

The function read_html provides a convenient way to download and parse a webpage; it accepts a URL as a parameter. The function downloads the page and interprets the HTML source code as an HTML / XML object.

3.1 Dynamic web pages

To make sure that we get the dynamically rendered HTML content of the website, we load the page in our PhantomJS session first, and then use the rendered source.

NOTICE: In case the website does not fetch or alter the to-be-scraped content dynamically, you can omit the PhantomJS webdriver and just download the static HTML source code to retrieve the information from there. In this case, a simple call of html_document <- read_html(url) suffices, where the read_html() function downloads the unrendered page source code directly.
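Both variants can be sketched in one small helper. The function name get_html_document is hypothetical; the go() and getSource() session methods are assumed from the webdriver package, and pjs_session is expected to be a session object created beforehand.

```r
library(rvest)

# Hypothetical helper: returns a parsed HTML document for `url`.
# With a webdriver session the page is rendered first; without one,
# the static source is downloaded and parsed directly.
get_html_document <- function(url, pjs_session = NULL) {
  if (is.null(pjs_session)) {
    return(read_html(url))
  }
  pjs_session$go(url)                         # load and render the page
  rendered_source <- pjs_session$getSource()  # HTML after JavaScript has run
  read_html(rendered_source)
}
```

For a static page, html_document <- get_html_document(url) is then equivalent to calling read_html(url) directly.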

3.2 Scrape information from XHTML

HTML / XML objects are a structured representation of HTML / XML source code, which allows us to extract single elements (headlines e.g. <h1>, paragraphs <p>, links <a>, …), their attributes (e.g. <a href='http://...'>) or text wrapped in between elements (e.g. <p>my text...</p>). Elements can be extracted from XML objects with XPATH-expressions.

XPATH (see https://en.wikipedia.org/wiki/XPath) is a query language to select elements in XML-tree structures. We use it to select the headline element from the HTML page. The following xpath expression queries for first-order-headline elements h1, anywhere in the tree // which fulfill a certain condition [...], namely that the class attribute of the h1 element must contain the value content__headline.

The next expression uses R’s pipe operator %>%, which takes the input from the left side of the expression and passes it on to the function on the right side as its first argument. The result of this function is either passed on to the next function, again via %>%, or assigned to a variable if it is the last operation in the pipe chain. Our pipe takes the html_document object and passes it to the html_node function, which extracts the first node fitting the given xpath expression. The resulting node object is passed to the html_text function, which extracts the text wrapped in the h1-element.
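The headline extraction described above can be sketched as follows. The class value content__headline is taken from the text; the inline HTML is a hypothetical stand-in for the rendered article page, and the live site’s markup may differ.

```r
library(rvest)

# Hypothetical stand-in for the rendered article page.
html_document <- read_html(
  "<html><body>
     <h1 class='content__headline'>Example headline</h1>
     <h1 class='other'>Unrelated heading</h1>
   </body></html>"
)

# h1 elements anywhere in the tree whose class attribute contains the value.
title_xpath <- "//h1[contains(@class, 'content__headline')]"

title_text <- html_document %>%
  html_node(xpath = title_xpath) %>%  # first node matching the expression
  html_text(trim = TRUE)              # text wrapped in the h1 element
```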

Let’s see what title_text contains by printing it to the console.

Now we modify the xpath expressions, to extract the article info, the paragraphs of the body text and the article date. Note that there are multiple paragraphs in the article. To extract not only the first, but all paragraphs we utilize the html_nodes function and glue the resulting single text vectors of each paragraph together with the paste0 function.
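A sketch of these modified expressions on a hypothetical document. The class names content__standfirst and content__article-body and the use of a <time> element with a datetime attribute are assumptions about the page structure, not confirmed by the text.

```r
library(rvest)

# Hypothetical stand-in for the article page; class names are assumptions.
html_document <- read_html(
  "<html><body>
     <div class='content__standfirst'><p>Short intro.</p></div>
     <time datetime='2017-06-26T18:28:08+0100'>26 Jun 2017</time>
     <div class='content__article-body'>
       <p>First paragraph.</p>
       <p>Second paragraph.</p>
     </div>
   </body></html>"
)

intro_text <- html_document %>%
  html_node(xpath = "//div[contains(@class, 'content__standfirst')]//p") %>%
  html_text(trim = TRUE)

# html_nodes returns all matching paragraphs; paste0 glues them together.
body_text <- html_document %>%
  html_nodes(xpath = "//div[contains(@class, 'content__article-body')]//p") %>%
  html_text(trim = TRUE) %>%
  paste0(collapse = "\n")

# Keep the first ten characters (YYYY-MM-DD) of the datetime attribute.
date_object <- html_document %>%
  html_node(xpath = "//time") %>%
  html_attr(name = "datetime") %>%
  substr(1, 10) %>%
  as.Date()
```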

The variables title_text, intro_text, body_text and date_object now contain the raw data for any subsequent text processing.

Usually, we do not want to download a single document, but a series of documents. In our second exercise, we want to download all Guardian articles tagged with “Angela Merkel”. Instead of a tag page, we could also be interested in downloading results of a site-search engine or any other link collection. The task is always two-fold: First, we download and parse the tag overview page to extract all links to articles of interest.

Second, we download and scrape each individual article page. For this, we extract all href-attributes from a-elements fitting a certain CSS-class. To select the right contents via XPATH-selectors, you need to investigate the HTML-structure of your specific page. Modern browsers such as Firefox and Chrome support you in that task by a function called “Inspect Element” (or similar), available through a right-click on the page element.
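The link extraction can be sketched as below. The class name fc-item__container and the example.org URLs are hypothetical; the real class has to be looked up with the browser’s inspection tool.

```r
library(rvest)

# Hypothetical stand-in for the tag overview page.
html_document <- read_html(
  "<body>
     <div class='fc-item__container'><a href='https://example.org/article-1'>One</a></div>
     <div class='fc-item__container'><a href='https://example.org/article-2'>Two</a></div>
     <a href='https://example.org/about'>About</a>
   </body>"
)

# a-elements directly inside containers of the given class, then their href.
links <- html_document %>%
  html_nodes(xpath = "//div[contains(@class, 'fc-item__container')]/a") %>%
  html_attr(name = "href")
```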

Now, links contains a list of 20 hyperlinks to single articles tagged with Angela Merkel.

But stop! There is not only one page of links to tagged articles. If you look at the page in your browser, you will see that the tag overview page has more than 60 sub pages, accessible via a paging navigator at the bottom. By clicking on the second page, we see a different URL structure, which now contains a specific page number. We can use that format to create links to all sub pages by combining the base URL with the page numbers.
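Combining a base URL with page numbers might look like this. The exact URL pattern, including the ?page= parameter, is an assumption; check the address shown in your browser after clicking on the second page.

```r
# Assumed paging pattern; verify it against the URL shown in the browser.
base_url <- "https://www.theguardian.com/world/angela-merkel?page="

# Only the first three sub pages, to keep the example small.
page_numbers <- 1:3

# paste0 is vectorised, so this creates one URL per page number.
paging_urls <- paste0(base_url, page_numbers)
```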

Now we can iterate over all URLs of tag overview pages, to collect more/all links to articles tagged with Angela Merkel. We iterate with a for-loop over all URLs and append results from each single URL to a vector of all links.
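The loop can be sketched as follows. To stay runnable offline, it iterates over in-memory HTML strings instead of URLs; in real use the loop would run over the overview page URLs, since read_html accepts both. The class name and the example.org links are hypothetical.

```r
library(rvest)

link_xpath <- "//div[contains(@class, 'fc-item__container')]/a"

# Hypothetical in-memory pages standing in for the downloaded overview pages.
overview_pages <- c(
  "<div class='fc-item__container'><a href='https://example.org/a1'>A1</a></div>",
  paste0(
    "<div class='fc-item__container'><a href='https://example.org/a2'>A2</a></div>",
    "<div class='fc-item__container'><a href='https://example.org/a3'>A3</a></div>"
  )
)

all_links <- NULL
for (page_source in overview_pages) {
  html_document <- read_html(page_source)
  links <- html_document %>%
    html_nodes(xpath = link_xpath) %>%
    html_attr(name = "href")
  # Append the links of this page to the vector of all links.
  all_links <- c(all_links, links)
}
```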

An effective way of programming is to encapsulate repeatedly used code in a specific function. This function can then be called with specific parameters, process something and return a result. We use this here to encapsulate the downloading and parsing of a Guardian article given a specific URL. The code is the same as in our exercise 1 above, except that we combine the extracted texts and metadata in a data.frame and wrap the entire process in a function block.

Now we can use that function scrape_guardian_article in any other part of our script. For instance, we can loop over each of our collected links. We use a running variable i, taking values from 1 to length(all_links) to access the single links in all_links and write some progress output.
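A reduced sketch of the function and the loop. It extracts only the title and body, the XPath class names are assumptions, and two temporary HTML files stand in for the article URLs so the example runs offline.

```r
library(rvest)

# Downloads and parses one article, returning a one-row data.frame.
scrape_guardian_article <- function(url) {
  html_document <- read_html(url)
  title_text <- html_document %>%
    html_node(xpath = "//h1[contains(@class, 'content__headline')]") %>%
    html_text(trim = TRUE)
  body_text <- html_document %>%
    html_nodes(xpath = "//div[contains(@class, 'content__article-body')]//p") %>%
    html_text(trim = TRUE) %>%
    paste0(collapse = "\n")
  data.frame(url = url, title = title_text, body = body_text,
             stringsAsFactors = FALSE)
}

# Hypothetical local files standing in for the collected article links.
make_page <- function(headline) {
  path <- tempfile(fileext = ".html")
  writeLines(paste0(
    "<html><body><h1 class='content__headline'>", headline, "</h1>",
    "<div class='content__article-body'><p>Some text.</p></div></body></html>"
  ), path)
  path
}
all_links <- c(make_page("First headline"), make_page("Second headline"))

all_articles <- data.frame()
for (i in seq_along(all_links)) {   # i runs from 1 to length(all_links)
  cat("Downloading", i, "of", length(all_links), "URL:", all_links[i], "\n")
  article <- scrape_guardian_article(all_links[i])
  all_articles <- rbind(all_articles, article)  # append as a new row
}
```

seq_along(all_links) is used instead of 1:length(all_links) so that the loop body is skipped when no links were collected.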


The last command writes the extracted articles to a CSV file in the data directory for later use.
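Writing the result to disk might look like this. The file name guardian_merkel.csv and the sample rows are hypothetical, and the sketch writes to a temporary directory rather than the tutorial’s data directory.

```r
# Hypothetical result of the scraping loop.
all_articles <- data.frame(
  url   = c("https://example.org/a1", "https://example.org/a2"),
  title = c("First headline", "Second headline"),
  stringsAsFactors = FALSE
)

# Temporary location for the example; in the tutorial setting this would
# be a path inside the data directory.
output_file <- file.path(tempdir(), "guardian_merkel.csv")

# write.csv2 uses ';' as field separator and ',' as decimal mark.
write.csv2(all_articles, file = output_file, row.names = FALSE)
```

The file can later be read back with read.csv2(output_file).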

Try to perform extraction of news articles from another web page, e.g. https://www.spiegel.de or https://www.nytimes.com.

For this, investigate the URL patterns of the page and look into the source code with the “Inspect Element” functionality of your browser to find appropriate XPATH expressions.


2020, Andreas Niekler and Gregor Wiedemann. GPLv3. tm4ss.github.io